Data Integration Algorithm – Improve Modelling Accuracy

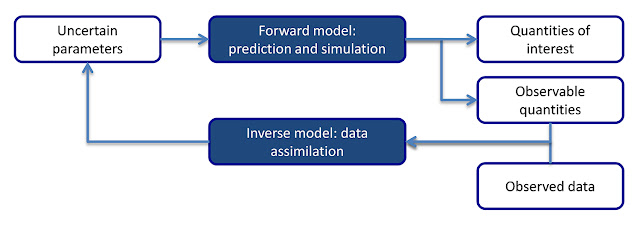

Parametric uncertainty is a significant source of error in modelling physical systems, where the values of the model parameters that characterize the system are uncertain because of inadequate knowledge or limited data. In such cases, data integration algorithms can improve modelling accuracy by quantifying and reducing this uncertainty. However, these algorithms frequently need a very large number of repeated model evaluations, which incurs substantial computational cost.

To address this issue, PNNL's Dr Weixuan Li, together with Professor Guang Lin from Purdue University, proposed an adaptive importance sampling algorithm that alleviates the burden imposed by computationally demanding models. In three test cases, they demonstrated that the algorithm could effectively capture the complex posterior parametric uncertainties for the specific problems examined while also improving computational efficiency. With the great advances in modern computers, numerical models are now used routinely to simulate the behaviour of physical systems in scientific fields ranging from climate to chemistry and from materials to biology, several of them within DOE's critical mission areas.

Several Potential Applications

However, parametric uncertainty often arises in these models because of insufficient knowledge of the system being simulated, resulting in models that diverge from reality. The algorithm developed in this study offers an effective means of inferring model parameters from direct and/or indirect measurement data through uncertainty quantification, thereby improving model accuracy. It has several potential applications. For instance, it can be used to estimate the unknown location of an underground contaminant source and to improve the accuracy of the model that predicts how the groundwater is affected by this source. Two key techniques implemented in this algorithm are:

- A Gaussian mixture (GM) model, built adaptively to capture the distribution of the uncertain parameters

- A mixture of polynomial chaos (PC) expansions, built as a surrogate model to relieve the computational burden of forward model evaluations

These techniques give the algorithm great flexibility in handling multimodal distributions and strongly nonlinear models while keeping computational costs low.
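The idea of adapting a Gaussian mixture proposal inside an importance sampler can be sketched in a few lines. This is a minimal illustration and not the authors' implementation: the one-dimensional bimodal target `log_target`, the initial proposal, and the weighted EM-style update are all assumptions chosen for the example, and the target is evaluated directly rather than through a polynomial chaos surrogate.

```python
import numpy as np

def log_target(x):
    # Hypothetical unnormalised bimodal posterior: modes at -2 and +2, width 0.5.
    return np.logaddexp(-0.5 * ((x + 2.0) / 0.5) ** 2,
                        -0.5 * ((x - 2.0) / 0.5) ** 2)

def gm_log_components(x, w, mu, sd):
    # log( w_k * N(x | mu_k, sd_k^2) ) for every sample and component, shape (n, K).
    z = (x[:, None] - mu[None, :]) / sd[None, :]
    return np.log(w)[None, :] - 0.5 * z ** 2 - np.log(sd)[None, :] - 0.5 * np.log(2 * np.pi)

def adaptive_importance_sampling(n_iter=20, n_samples=2000, seed=0):
    rng = np.random.default_rng(seed)
    # Assumed initial proposal: two broad components that cover both modes.
    w = np.array([0.5, 0.5])
    mu = np.array([-3.0, 3.0])
    sd = np.array([2.0, 2.0])
    for _ in range(n_iter):
        # 1. Draw samples from the current Gaussian mixture proposal.
        k = rng.choice(len(w), size=n_samples, p=w)
        x = rng.normal(mu[k], sd[k])
        # 2. Self-normalised importance weights: target / proposal.
        comp = gm_log_components(x, w, mu, sd)
        log_q = np.logaddexp.reduce(comp, axis=1)
        log_iw = log_target(x) - log_q
        iw = np.exp(log_iw - log_iw.max())
        iw /= iw.sum()
        # 3. Refit the mixture with one weighted EM-style step.
        resp = np.exp(comp - log_q[:, None])      # component responsibilities
        r = iw[:, None] * resp
        nk = r.sum(axis=0) + 1e-12                # guard against empty components
        w = nk / nk.sum()
        mu = (r * x[:, None]).sum(axis=0) / nk
        sd = np.sqrt((r * (x[:, None] - mu[None, :]) ** 2).sum(axis=0) / nk) + 1e-6
    return x, iw, w, mu, sd

x, iw, w, mu, sd = adaptive_importance_sampling()
print("weights:", w, "means:", mu, "std devs:", sd)
```

As the proposal moves toward the target, the importance weights become more uniform, so fewer samples are wasted in regions of low posterior probability. In a setting with an expensive forward model, each call to `log_target` would be the costly step that a surrogate is meant to replace.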

Although the algorithm worked well for problems with a small number of uncertain parameters, ongoing research on problems with a larger number of uncertain parameters indicates that it is better to re-parameterize the problem, or to represent it with fewer parameters, than to sample directly from the high-dimensional probability density function. Ongoing work also involves implementing the algorithm within a sequential importance sampling framework for successive data integration problems. One example problem is dynamic state estimation of a power grid system.
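To show what successive data integration by importance reweighting looks like, here is a small sketch under stated assumptions. It is not taken from the study: the scalar parameter `theta`, the Gaussian prior, the noise level, and the direct observation model `y = theta + noise` are all hypothetical and chosen only so that the result can be checked against the closed-form conjugate posterior.

```python
import numpy as np

def sequential_importance_update(theta, log_w, y, noise_sd):
    # Multiply each sample's weight by the likelihood of the new observation y,
    # assuming y ~ N(theta, noise_sd^2), then renormalise in log space.
    log_w = log_w - 0.5 * ((y - theta) / noise_sd) ** 2
    return log_w - np.logaddexp.reduce(log_w)

def effective_sample_size(log_w):
    # Standard ESS estimate for normalised weights: 1 / sum(w_i^2).
    return 1.0 / np.sum(np.exp(2.0 * log_w))

rng = np.random.default_rng(1)
true_theta, noise_sd, prior_sd = 1.5, 0.5, 2.0      # hypothetical values

theta = rng.normal(0.0, prior_sd, size=5000)        # samples from the prior N(0, prior_sd^2)
log_w = np.full(theta.size, -np.log(theta.size))    # start with uniform weights
observations = true_theta + noise_sd * rng.normal(size=10)

for y in observations:                              # integrate the data one at a time
    log_w = sequential_importance_update(theta, log_w, y, noise_sd)

posterior_mean = np.sum(np.exp(log_w) * theta)
ess = effective_sample_size(log_w)

# Closed-form posterior mean for this conjugate Gaussian model, for comparison.
precision = 1.0 / prior_sd ** 2 + observations.size / noise_sd ** 2
exact_mean = (observations.sum() / noise_sd ** 2) / precision
print(posterior_mean, exact_mean, ess)
```

The effective sample size drops as more data are integrated because the fixed prior samples become a poorer match for the narrowing posterior. That degradation is one reason to adapt the proposal between updates rather than reweight a fixed sample set.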
